Evaluation

AI Judge

Evaluate models using AI feedback

Adaptive Engine allows you to evaluate models on logged interactions or datasets using AI feedback.

An AI Judge typically refers to using a powerful LLM to evaluate the performance of another LLM. Using LLMs as judges enables cheap and effective model evaluation at scale, with little to no dependency on human annotation and its labor cost.

Adaptive includes both pre-built AI Judge evaluation recipes for common use cases (such as RAG) and custom evaluation recipes where you define your own evaluation criteria, without having to deal with the complexities of an evaluation pipeline. You decide which model to use as a Judge; connected proprietary models are supported.

Evaluation jobs output an average scalar score and a textual judgement that captures the AI Judge’s reasoning behind that score. You can view the evaluation score for each evaluated model on your use case page, and drill down into the judgements for individual completions in the interaction store.

Adaptive Engine currently supports the following recipes: Faithfulness, Context Relevancy, Answer Relevancy, and Custom guidelines evaluation.

See the SDK Reference for the full specification on evaluation job creation.

Pre-built evaluation recipes

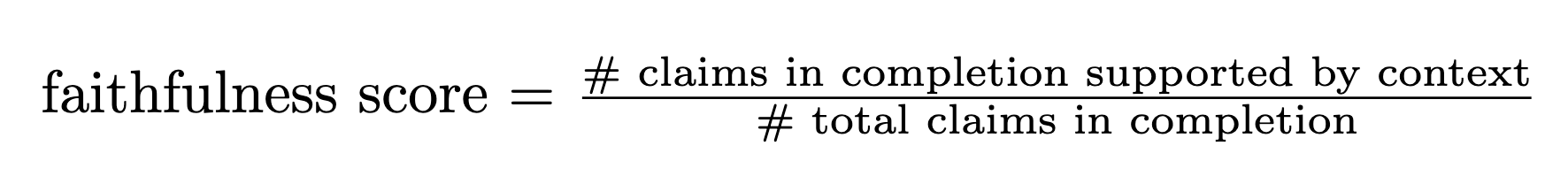

Faithfulness

Measures a completion’s adherence to the context or documents provided in the prompt. This metric is useful for grounded generation scenarios such as Retrieval-Augmented Generation (RAG). As a first step, the AI Judge breaks down the completion into individual atomic “claims”. The output score is an average, computed as the ratio of claims that are fully supported by the context to the total number of claims made in the completion. The textual judgement attached to each completion indicates which parts of the completion deviate from the provided context.

The supporting documents must be passed in `document` turns in the input messages. You can add all retrieved context in a single turn, but preferably each retrieved chunk should be added as a separate turn.
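The scoring arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not Adaptive Engine's implementation: the `Claim` class, the `faithfulness_score` function, and the sample claims are hypothetical, and the per-claim verdicts are written by hand rather than produced by an AI Judge.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """One atomic claim extracted from a completion (hypothetical structure)."""
    text: str
    supported: bool  # True only if the context fully supports the claim


def faithfulness_score(claims: list[Claim]) -> float:
    """Ratio of fully supported claims to all claims made in the completion."""
    if not claims:
        raise ValueError("the completion produced no claims to score")
    return sum(claim.supported for claim in claims) / len(claims)


# Hand-written verdicts standing in for the AI Judge's output.
claims = [
    Claim("The warranty lasts two years.", supported=True),
    Claim("The warranty covers water damage.", supported=False),
    Claim("Claims are filed online.", supported=True),
    Claim("Refunds take five days.", supported=True),
]
print(faithfulness_score(claims))  # 0.75
```

Here three of the four claims are supported, so the completion scores 0.75, and the unsupported claim is what you would expect the textual judgement to point out.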

Sample input/output

Input messages | Completion | Eval score and judgements

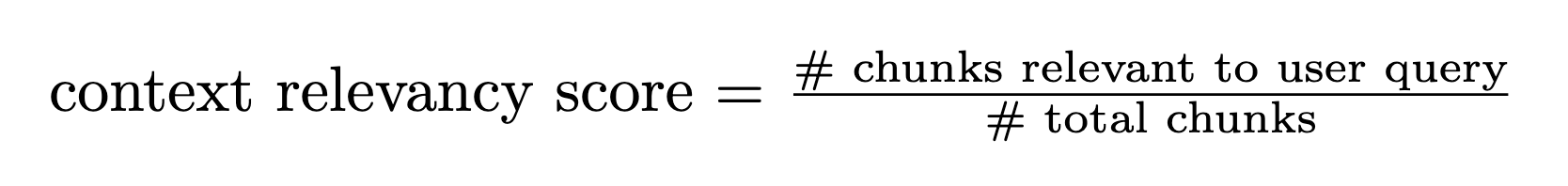

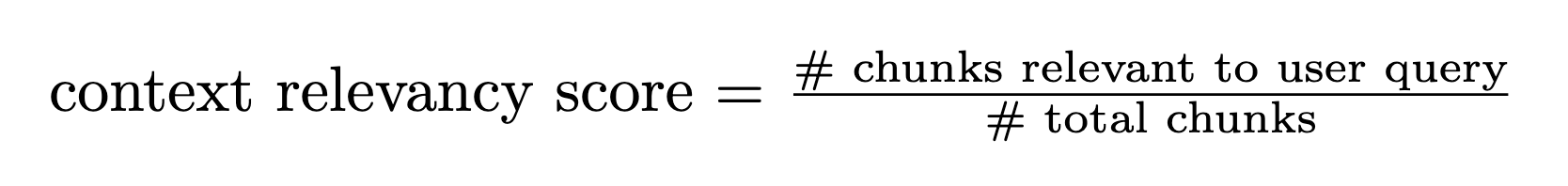

Context Relevancy

Measures the overall relevance of the information presented in supporting context/documents with regard to the prompt. This metric can be used as a proxy for the quality of your RAG pipeline. This evaluation recipe requires each retrieved chunk to be passed in a separate `document` turn in the input messages.

The output score is an average, computed as the ratio of chunks that contain information relevant to answering the user query to the total number of input chunks (which equals the number of `document` turns in the prompt).
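As a rough sketch of the input shape and the resulting score, the snippet below places each retrieved chunk in its own document turn and computes the ratio from per-chunk verdicts. The message layout (dictionaries with `role` and `content` keys, documents placed before the user turn) and both function names are assumptions made for illustration; check the SDK Reference for the actual message format.

```python
def build_messages(query: str, chunks: list[str]) -> list[dict[str, str]]:
    """Put each retrieved chunk in its own document turn, then add the user query.

    The dict layout and turn order are assumptions, not the documented format.
    """
    messages = [{"role": "document", "content": chunk} for chunk in chunks]
    messages.append({"role": "user", "content": query})
    return messages


def context_relevancy_score(chunk_is_relevant: list[bool]) -> float:
    """Ratio of relevant chunks to the total number of input chunks."""
    if not chunk_is_relevant:
        raise ValueError("at least one document turn is required")
    return sum(chunk_is_relevant) / len(chunk_is_relevant)


chunks = [
    "Returns are accepted within 30 days of delivery.",
    "Our headquarters moved to a new office in 2021.",
    "Refunds are issued to the original payment method.",
    "The company sponsors a local football team.",
]
messages = build_messages("What is the return policy?", chunks)

# Hand-written verdicts standing in for the AI Judge, one per document turn.
verdicts = [True, False, True, False]
print(len(messages) - 1)                  # 4 document turns
print(context_relevancy_score(verdicts))  # 0.5
```

Keeping one chunk per turn makes the denominator equal to the number of retrieved chunks, which is what lets the score reflect retrieval quality.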

Sample input/output

Input messages | Eval score and judgements

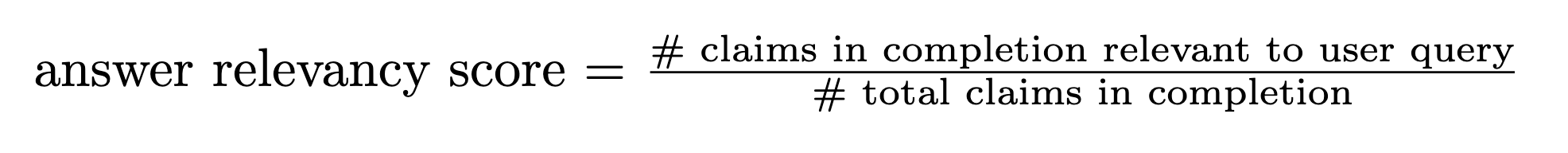

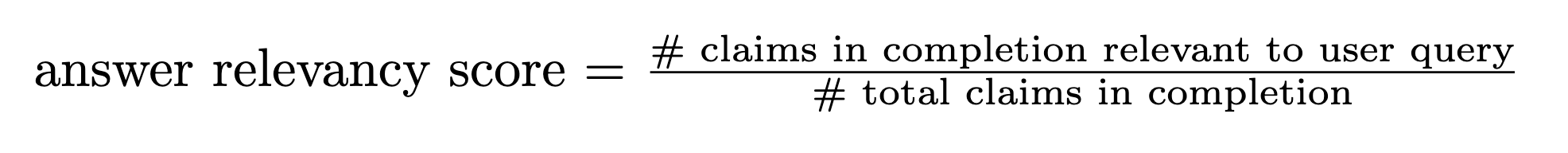

Answer Relevancy

Measures the overall relevance/effectiveness of a completion in answering the user query. This metric is useful for evaluating the balance between conciseness and extra “chattiness” in completions. As a first step, the AI Judge breaks down the completion into individual atomic “claims”. The output score is an average, computed as the ratio of claims that directly answer the user’s query to the total number of claims made in the completion.
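To see how chattiness lowers this score, consider the hypothetical comparison below. The function name and the hand-written per-claim verdicts are assumptions for illustration; in practice the AI Judge extracts and labels the claims.

```python
def answer_relevancy_score(claim_answers_query: list[bool]) -> float:
    """Ratio of claims that directly answer the query to all claims made."""
    if not claim_answers_query:
        raise ValueError("the completion produced no claims to score")
    return sum(claim_answers_query) / len(claim_answers_query)


# A concise completion: both claims answer the question.
concise = [True, True]

# A chatty completion: the same two useful claims plus three tangential ones.
chatty = [True, True, False, False, False]

print(answer_relevancy_score(concise))  # 1.0
print(answer_relevancy_score(chatty))   # 0.4
```

Both completions contain the same useful information, but the extra tangential claims dilute the second one's score.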

Sample input/output

Input messages | Completion | Eval score and judgements

Custom evaluation recipes

Custom guidelines evaluation

Define your own evaluation guidelines. Guidelines are sets of individual evaluation criteria, expressed in natural language, that aim to verify adherence to a specific behaviour. Guidelines should be phrased along the lines of “The completion must …”, with the ellipsis replaced by the desired behaviour (refer to Tips on writing effective evaluation guidelines below for examples). Completions are evaluated against each individual guideline and judged as compliant (score = 1) or not compliant (score = 0) with that guideline.

The output score is the average computed across all scores for individual guidelines.

The textual judgement attached to each completion contains one reasoning trace per guideline, indicating why the completion did or did not adhere to the guideline.
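The relationship between per-guideline verdicts, the output score, and the textual judgement can be sketched as follows. The `GuidelineVerdict` class, the function names, the sample guidelines, and the judgement layout are all hypothetical; the actual output format is not specified here.

```python
from dataclasses import dataclass


@dataclass
class GuidelineVerdict:
    """The judge's verdict for one guideline (hypothetical structure)."""
    guideline: str
    compliant: bool
    reasoning: str


def guideline_score(verdicts: list[GuidelineVerdict]) -> float:
    """Average of the binary scores: 1 if compliant, 0 if not."""
    if not verdicts:
        raise ValueError("at least one guideline is required")
    return sum(1 if v.compliant else 0 for v in verdicts) / len(verdicts)


def judgement_text(verdicts: list[GuidelineVerdict]) -> str:
    """One reasoning trace per guideline, joined into a single judgement."""
    return "\n".join(
        f"[{'compliant' if v.compliant else 'not compliant'}] "
        f"{v.guideline} {v.reasoning}"
        for v in verdicts
    )


# Hand-written verdicts standing in for the AI Judge's output.
verdicts = [
    GuidelineVerdict(
        "The completion must be written in English.",
        True,
        "The whole reply is in English.",
    ),
    GuidelineVerdict(
        "The completion must not mention competitors.",
        False,
        "The second sentence names a competing product.",
    ),
]
print(guideline_score(verdicts))  # 0.5
print(judgement_text(verdicts))
```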

Sample input/output

Input messages | Completion | Evaluation Guidelines | Eval score and judgements

Tips on writing effective evaluation guidelines

For best custom evaluation performance, each input guideline should be atomic (i.e. it evaluates a single behaviour), with minimal overlap in what is evaluated across guidelines. This ensures that each desired behaviour is evaluated only once, and that the score for each behaviour is not affected by small failures on additional directives bundled into the same guideline.

✅ Example of good guidelines (atomic):

❌ Example of bad guidelines (too broad/bundled):
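The effect of bundling can be illustrated with a small calculation. The behaviours and guidelines below are hypothetical, written for this sketch rather than taken from the product's own examples, and the verdicts are assigned by hand instead of by an AI Judge.

```python
def average_score(verdicts: dict[str, bool]) -> float:
    """Average of binary guideline scores (compliant = 1, not compliant = 0)."""
    if not verdicts:
        raise ValueError("at least one guideline is required")
    return sum(verdicts.values()) / len(verdicts)


# Hypothetical behaviours, and whether one particular completion exhibits each.
behaviours = {
    "be written in English": True,
    "address the customer by name": True,
    "stay under 100 words": False,
    "end with a follow-up question": True,
}

# Atomic: one guideline per behaviour.
atomic = {f"The completion must {b}.": ok for b, ok in behaviours.items()}

# Bundled: a single guideline that is compliant only if every behaviour holds.
bundled = {
    "The completion must " + ", ".join(behaviours) + ".": all(behaviours.values())
}

print(average_score(atomic))   # 0.75
print(average_score(bundled))  # 0.0
```

With atomic guidelines, the single failure costs a quarter of the score and the judgement shows which behaviour failed. With the bundled guideline, the same completion scores zero and the three behaviours it got right are hidden.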